In our previous posts we discussed image inversion and gradients. Today we will discuss masking and conclude the Reflection example provided by Apple. The source code of our example is available on GitHub. Please feel free to modify or test this code on your machine.

Image Masking:

I believe we are all familiar with the idea of bit masking. In bit masking we change bits in input data to on, off or invert by applying bit operators. For example if input bits are 0110 and if we apply OR bit operator on this data using some mask such as 1111 then the result of this operation would be 1111. Similarly in Image mask we apply a mask on a given image to switch on, off or modify part of the image. In iOS there are different techniques to mask an image.

Masking using an image mask

A bitmap image mask contains sample values (S) at each point corresponding to an image. Each sample value is represented through 1, 2, 4 or 8 bits per components and it specifies the amount that the current fill color is masked at each location in the image.

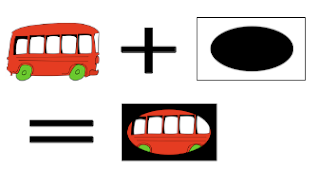

Lets take an example to understand image masking. Suppose we have an image of a bus and we only want to display part of it. To obtain this, we mask bus image with an image mask as shown in figure 1:

As you can see in figure1 our original image is a bus image. We mask this image with an image mask and in result we get an image which shows portion of the bus and hides rest of the image. You might have noticed that our image mask is composed of ellipse filled with black color and surrounded by white rectangle. In the output image only that portion of the original image is visible which is masked by black ellipse in the image mask. The area which is masked by surrounding white rectangle in the image mask is hidden in the result image. The black color in the output image represents unpainted area and could be filled by any background color.

So how does it work? Image mask works as an inverse alpha value (1-S), where S is the sample value of a point in mask image. To recall alpha value 0 means opaque and 1 means transparent. In image mask sample value (S) works as follows:

We first create a gray scale image with black ellipse and surrounding white rectangle.

Masking using an image

Instead of using an image mask we can mask an image with another image. When an image is used as a mask its sample value (S) per component behaves as an alpha value.

Similar to Figure 1 original image is a bus image and output image shows portion of bus with unpainted area around it. The only difference is that in figure 1 image mask has black ellipse in centre and white rectangle around it. In Figure 4 image which is used as a mask has white ellipse in centre and black rectangle around it. Because we are using an image instead of image mask for masking therefore masking behaves as an alpha value. White rectangle in centre has sample value 1.0 which means its alpha value is 1 and therefore sample values in original image in that portion are visible in the output image. Black rectangle has sample value 0.0 therefore its alpha value is 0 and hence sample values in original image are blocked in the output image. Implementation of this code is very simple in iOS.

We first create a gray scale image with white ellipse and surrounding black rectangle.

Masking using colors

We can mask a particular color or range of colors from an image by function CGImageCreateWithMaskingColors. For example if we want to change the color of wheels in our original image we can do it by masking original image with range of green colors as shown in figure 4.

In figure 5 we have masked original bus image with range of green colors. These colors start from green to light green. Based on this input CGImageCreateWithMaskingColors removes range of green colors from input image. We have left area of wheels unpainted but we can fill these colors with any color of our choice. Now we will look at the code and the output is shown in figure 6.

We can now mask our image with color components as follows:

Masking using CGContextClip

CGContextClipToMask function is used to map a mask into a rectangle and intersect it with the current clipping area of a graphics context. This function takes three parameters.

We will only discuss reflected image code as we have already covered gradient in our previous post.

I hope the series of posts related to image reflection might have been useful to describe image inversion, gradient and masking concepts. Please feel free to provide your comments and feedback.

Image Masking:

I believe we are all familiar with the idea of bit masking. In bit masking we switch bits in the input data on or off, or invert them, by applying bitwise operators. For example, if the input bits are 0110 and we apply the OR operator with the mask 1111, the result is 1111. Similarly, in image masking we apply a mask to a given image to switch on, switch off or modify part of the image. In iOS there are different techniques to mask an image:

- Masking using an image mask.

- Masking using an image.

- Masking using colors.

- Masking using Context clip.
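The bit masking idea mentioned above can be sketched in plain C (which also compiles as Objective-C). This is only an illustration; the function names bits_on, bits_off, bits_invert and check_article_example are made up for this sketch and are not part of any iOS API.

```c
#include <assert.h>
#include <stdint.h>

/* Switch on every bit that is set in mask (bitwise OR). */
static uint8_t bits_on(uint8_t input, uint8_t mask) {
    return (uint8_t)(input | mask);
}

/* Switch off every bit that is set in mask (AND with the complement). */
static uint8_t bits_off(uint8_t input, uint8_t mask) {
    return (uint8_t)(input & (uint8_t)~mask);
}

/* Invert every bit that is set in mask (bitwise XOR). */
static uint8_t bits_invert(uint8_t input, uint8_t mask) {
    return (uint8_t)(input ^ mask);
}

/* The example from the text: 0110 OR 1111 gives 1111 (0x6 and 0xF in hex). */
static void check_article_example(void) {
    assert(bits_on(0x6, 0xF) == 0xF);
}
```

An image mask plays the same role as the mask argument here, except that each sample can also take values between fully on and fully off.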

Masking using an image mask

A bitmap image mask contains a sample value (S) at each point corresponding to a point in the image. Each sample value is represented with 1, 2, 4 or 8 bits per component, and it specifies how much the current fill color is masked at that location in the image.

Lets take an example to understand image masking. Suppose we have an image of a bus and we only want to display part of it. To obtain this, we mask bus image with an image mask as shown in figure 1:

|

| Figure1: Bus image is masked with image mask. (a)original image (b) image mask (c) masked image. |

As you can see in Figure 1, our original image is a bus image. We mask this image with an image mask and as a result we get an image which shows a portion of the bus and hides the rest. You might have noticed that our image mask is composed of an ellipse filled with black and surrounded by a white rectangle. In the output image, only the portion of the original image that is covered by the black ellipse of the image mask is visible. The area covered by the surrounding white rectangle is hidden in the resulting image. The black color in the output image represents the unpainted area, which could be filled with any background color.

So how does it work? An image mask works as an inverse alpha value (1 - S), where S is the sample value of a point in the mask image. Recall that an alpha value of 0 means fully transparent (nothing is painted) and 1 means fully opaque (painted at full coverage). In an image mask the sample value (S) works as follows:

- 0 means the mask is transparent. In this case the pixel or point in the original image is visible in the output image. Note that the alpha value is 1, i.e. 1 - S = 1.

- 1 means the mask is opaque. In this case the pixel or point in the original image is blocked in the output image. Note that the alpha value is 0, i.e. 1 - S = 0.

- Any value greater than 0 and less than 1 lets the pixel or point through according to the inverse alpha value. If S is 0.3 then the alpha value is 1 - 0.3 = 0.7, which means the pixel is painted at 70% opacity in the output image.
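The rules above can be written down as a small plain C sketch. It is a simplified model, not Quartz code: it assumes the default mapping where the smallest raw sample is S = 0 and the largest is S = 1, and the names mask_sample_to_unit, image_mask_alpha and paint_channel are hypothetical.

```c
#include <assert.h>

/* Normalize a raw mask sample stored with 1, 2, 4 or 8 bits to S in [0, 1]. */
static double mask_sample_to_unit(unsigned raw, unsigned bits_per_component) {
    assert(bits_per_component == 1 || bits_per_component == 2 ||
           bits_per_component == 4 || bits_per_component == 8);
    unsigned max_value = (1u << bits_per_component) - 1u;
    assert(raw <= max_value);
    return (double)raw / (double)max_value;
}

/* Image mask: the sample acts as an inverse alpha, so alpha = 1 - S. */
static double image_mask_alpha(double s) {
    return 1.0 - s;
}

/* Paint one color channel of the source pixel over a destination with that alpha. */
static double paint_channel(double source, double destination, double alpha) {
    return alpha * source + (1.0 - alpha) * destination;
}
```

With a black sample (S = 0) paint_channel returns the source channel unchanged, and with a white sample (S = 1) it returns the destination, which matches the first two bullets.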

Figure 2: Masking using an image mask. (a) Original image (b) masked image.

We first create a gray scale image with a black ellipse and a surrounding white rectangle.

UIImage* grayScaleImage = getGrayScaleImage(originalImage);

We discussed gray scale images in our previous post. After creating the gray scale image we create an image mask.

size_t width = CGImageGetWidth(grayScaleImage.CGImage);

size_t height = CGImageGetHeight(grayScaleImage.CGImage);

size_t bitsPerPixel = CGImageGetBitsPerPixel(grayScaleImage.CGImage);

size_t bytesPerRow = CGImageGetBytesPerRow(grayScaleImage.CGImage);

CGDataProviderRef providerRef = CGImageGetDataProvider(grayScaleImage.CGImage);

CGImageRef imageMask = CGImageMaskCreate(width, height, 8, bitsPerPixel, bytesPerRow, providerRef, NULL, false);

Creating an image mask is very simple in iOS. First we obtain the parameter values for CGImageMaskCreate using standard functions such as CGImageGetWidth, CGImageGetHeight and CGImageGetBytesPerRow. After this we call CGImageMaskCreate, which produces the image mask. We now have both the original image and the image mask. To mask the original image with the image mask we call CGImageCreateWithMask.

CGImageRef maskedImage = CGImageCreateWithMask(originalImage.CGImage, imageMask);

CGImageRelease(imageMask);

UIImage* image = [UIImage imageWithCGImage:maskedImage];

CGImageRelease(maskedImage);

CGImageCreateWithMask takes two parameters, the original image and the image mask, and returns the masked image. We wrap the masked image in a UIImage object and release the masked image reference. If we want to paint the unpainted area in our masked image, we can draw the masked image in a bitmap context which is filled with a background color. I have not included this in the source code, but you can use the following function to get an output as shown in Figure 3.

-(CGImageRef)fillBackgroundColorInImage:(CGImageRef)image imageWidth:(CGFloat)imageWidth imageHeight:(CGFloat)imageHeight{

//create RGB color space.

CGColorSpaceRef colorSpace = CGColorSpaceCreateDeviceRGB();

// create a bitmap graphics context with the width and height given in the function parameters.

CGContextRef bitmapContext = CGBitmapContextCreate(NULL, imageWidth, imageHeight, 8, 0, colorSpace, (kCGBitmapByteOrder32Little | kCGImageAlphaPremultipliedFirst));

CGColorSpaceRelease(colorSpace);

//bounds of the image inside the context.

CGRect imageBounds = CGRectMake(0.0, 0.0, imageWidth, imageHeight);

//set background color with blue color.

CGContextSetFillColorWithColor(bitmapContext, [UIColor blueColor].CGColor);

CGContextFillRect(bitmapContext, imageBounds);

//draw image in the context.

CGContextDrawImage(bitmapContext,imageBounds, image);

//Get the image from the context.

CGImageRef filledImage = CGBitmapContextCreateImage(bitmapContext);

CGContextRelease(bitmapContext);

//autorelease filledImage (this compiles only under manual reference counting, not under ARC).

if (filledImage){

filledImage = (CGImageRef)[(id)filledImage autorelease];

}

return filledImage;

}

This function takes the following inputs:

- The image. In our case this is the output image which we get after masking the original image with an image mask or another image.

- Width of the input image.

- Height of the input image.

Figure 3: Masked image with background color.
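What filling the background does to each pixel can be sketched in plain C as source-over compositing. This is a simplified model that assumes straight (non-premultiplied) RGBA values in the range 0 to 1, whereas the bitmap context above uses premultiplied alpha; the Pixel type and the functions over_background and fill_background are hypothetical names.

```c
#include <assert.h>
#include <stddef.h>

/* Straight (non-premultiplied) RGBA, each component in [0, 1]. */
typedef struct { double r, g, b, a; } Pixel;

/* Composite one pixel over an opaque background color (source-over). */
static Pixel over_background(Pixel p, Pixel background) {
    Pixel out;
    out.r = p.a * p.r + (1.0 - p.a) * background.r;
    out.g = p.a * p.g + (1.0 - p.a) * background.g;
    out.b = p.a * p.b + (1.0 - p.a) * background.b;
    out.a = 1.0; /* the result is fully painted */
    return out;
}

/* Fill the unpainted and partly painted pixels of a masked image. */
static void fill_background(Pixel *pixels, size_t count, Pixel background) {
    assert(pixels != NULL || count == 0);
    for (size_t i = 0; i < count; ++i) {
        pixels[i] = over_background(pixels[i], background);
    }
}
```

A pixel that the mask blocked completely (alpha 0) ends up with the background color, while a fully visible pixel keeps its own color.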

Masking using an image

Instead of using an image mask we can mask an image with another image. When an image is used as a mask, its sample value (S) per component behaves as an alpha value:

- 0 means the mask is opaque. In this case the pixel or point in the original image is blocked in the output image. The alpha value is 0.

- 1 means the mask is transparent. In this case the pixel or point in the original image is visible in the output image. The alpha value is 1.

- Any value greater than 0 and less than 1 lets the pixel or point through according to the alpha value. If S is 0.3 then the alpha value is 0.3. This means that the pixel is painted at 30% opacity in the output image.
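The difference between the two kinds of mask can be sketched in plain C. This is a simplified model assuming 8-bit gray samples; alpha_from_image, alpha_from_image_mask and invert_gray are hypothetical names, not Quartz functions.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Image used as a mask: the gray sample acts directly as alpha (S). */
static double alpha_from_image(uint8_t gray) {
    return gray / 255.0;
}

/* Image mask: the same sample acts as inverse alpha (1 - S). */
static double alpha_from_image_mask(uint8_t gray) {
    return 1.0 - gray / 255.0;
}

/* Invert gray samples in place, turning white-on-black into black-on-white. */
static void invert_gray(uint8_t *samples, size_t count) {
    assert(samples != NULL || count == 0);
    for (size_t i = 0; i < count; ++i) {
        samples[i] = (uint8_t)(255 - samples[i]);
    }
}
```

In this model, inverting the gray samples and switching from one kind of mask to the other gives the same alpha, which is why the two example masks in this post are inverses of each other.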

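Figure 4: Masking using an image. (a) Original image (b) image used as a mask (c) masked image.

Similar to Figure 1, the original image is a bus image and the output image shows a portion of the bus with an unpainted area around it. The only difference is that in Figure 1 the image mask has a black ellipse in the centre and a white rectangle around it, whereas in Figure 4 the image used as a mask has a white ellipse in the centre and a black rectangle around it. Because we are using an image instead of an image mask, the sample values behave as alpha values. The white ellipse in the centre has sample value 1.0, so its alpha value is 1 and the corresponding portion of the original image is visible in the output image. The black rectangle has sample value 0.0, so its alpha value is 0 and the corresponding portion of the original image is blocked in the output image. The implementation is very simple in iOS.

We first create a gray scale image with a white ellipse and a surrounding black rectangle.

UIImage* grayScaleImage = [self getGrayScaleImage:originalImage];

We now mask the original image with the gray scale image to get the output image.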

CGImageRef maskedImage = CGImageCreateWithMask(originalImage.CGImage, grayScaleImage.CGImage);

UIImage* image = [UIImage imageWithCGImage:maskedImage];

CGImageRelease(maskedImage);

We call CGImageCreateWithMask to get the masked image. This function takes two parameters: first the original image and second the image which is used as a mask. The output is the masked image.

Masking using colors

We can mask a particular color or range of colors out of an image with the function CGImageCreateWithMaskingColors. For example, if we want to change the color of the wheels in our original image, we can do it by masking the original image with a range of green colors as shown in Figure 5.

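Figure 5: Masking using colors. (a) Original image (b) range of green colors (c) masked image.

In Figure 5 we have masked the original bus image with a range of green colors, from green to light green. Based on this input, CGImageCreateWithMaskingColors removes that range of green colors from the input image. We have left the area of the wheels unpainted, but we could fill it with any color of our choice. Now we will look at the code; the output is shown in Figure 6.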

//range of mask colors in the format min[0], max[0], min[1], max[1]...

const CGFloat maskingColors[6] = {0, 100, 150, 255, 0, 100};

First we define the range of color components. The array must contain twice as many values as there are color components in the original image. For example, an RGB bitmap contains 3 color components: red, green and blue. Therefore we need an array of six values in the format min[0], max[0], min[1], max[1], min[2], max[2]. To define a range of colors from green (0, 150, 0) to light green (100, 255, 100), our array looks like this: {0, 100, 150, 255, 0, 100}.

We can now mask our image with the color components as follows:

//mask original image with mask colors.

CGImageRef maskedImageRef = CGImageCreateWithMaskingColors(originalImage.CGImage, maskingColors);

It is very simple to create a mask using CGImageCreateWithMaskingColors. It takes two parameters: the first is the image which needs to be masked and the second is the array of color components. The output of our code is shown in Figure 6.

Figure 6: Masking using colors. (a) Original image (b) masked image.
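The range test behind color masking can be sketched in plain C. This is a simplified model of the idea, not the Quartz implementation: a pixel is masked out when every component lies inside its [min, max] range, bounds included. The function name color_in_ranges is hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* ranges is laid out as {min[0], max[0], min[1], max[1], ...}. */
static bool color_in_ranges(const double *components,
                            const double *ranges,
                            size_t component_count) {
    assert(components != NULL && ranges != NULL);
    for (size_t i = 0; i < component_count; ++i) {
        double lo = ranges[2 * i];
        double hi = ranges[2 * i + 1];
        if (components[i] < lo || components[i] > hi) {
            return false; /* one component outside its range keeps the pixel */
        }
    }
    return true; /* all components inside their ranges: the pixel is masked out */
}
```

With the range {0, 100, 150, 255, 0, 100} from this post, the color (0, 200, 0) is masked out while (255, 0, 0) is kept.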

Masking using CGContextClip

The CGContextClipToMask function maps a mask into a rectangle and intersects it with the current clipping area of a graphics context. This function takes three parameters:

- Reference to graphics context which we want to clip.

- A rectangle to which we want to apply the mask.

- An image mask created through CGImageMaskCreate, or an image created through a Quartz function as we did in Masking using an image.

To create the reflection from Apple's Reflection example with CGContextClipToMask, we follow these steps:

1. Create a bitmap image and draw a linear gray scale gradient in it. The color at the start position of the gradient is black and the color at the end position is white. The colors in between are gray scale values.

2. Create a bitmap context of the size of the reflection which we want to display.

3. Call CGContextClipToMask with three parameters: first, a reference to the bitmap context created in step 2; second, a rectangle with the size of the reflected image; and third, the gradient image generated in step 1.

4. Draw the original image which we want to reflect in the bitmap context. When we draw the image it is clipped to the rectangle and masked with the image generated in step 1.

Figure 7: Image reflection

We will only discuss the reflected image code as we have already covered gradients in our previous post.

// create a bitmap graphics context the size of the image

CGContextRef mainViewContentContext = MyCreateBitmapContext(fromImage.bounds.size.width, height);

We create a bitmap context which has the same width as the original image and whose height equals the amount of the image we want to reflect.

// create a 2 bit CGImage containing a gradient that will be used for masking the

// main view content to create the 'fade' of the reflection. The CGImageCreateWithMask

// function will stretch the bitmap image as required, so we can create a 1 pixel wide gradient

CGImageRef gradientMaskImage = CreateGradientImage(1, height);

We create a gray scale gradient. The color at the start location of the gradient is black and the color at the end location is white. The area in between is filled with shades of gray. You might have noticed that we only create a gradient 1 pixel wide, with a height equal to the amount of reflection we want to display. The reason is that during masking CGContextClipToMask automatically stretches it to the size of the rectangle passed as its parameter.
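Why a 1 pixel wide gradient is enough can be sketched in plain C. This is a simplified model with 8-bit gray samples, not the code behind CreateGradientImage; the names make_gradient_column and stretched_sample are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Fill a 1 pixel wide column with a linear ramp from black (0) to white (255). */
static void make_gradient_column(uint8_t *column, size_t height) {
    assert(column != NULL && height >= 2);
    for (size_t y = 0; y < height; ++y) {
        column[y] = (uint8_t)((255 * y) / (height - 1));
    }
}

/* Sample the column as if it were stretched to any width. */
static uint8_t stretched_sample(const uint8_t *column, size_t height,
                                size_t x, size_t y) {
    (void)x; /* every x in a row maps back to the single source column */
    assert(column != NULL && y < height);
    return column[y];
}
```

Because the gradient only varies vertically, every pixel in a row gets the same mask value no matter how wide the target rectangle is.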

// create an image by masking the bitmap of the mainView content with the gradient view

// then release the pre-masked content bitmap and the gradient bitmap

CGContextClipToMask(mainViewContentContext, CGRectMake(0.0, 0.0, fromImage.bounds.size.width, height), gradientMaskImage);

CGImageRelease(gradientMaskImage);

CGContextClipToMask clips to the rectangle in the bitmap context and applies gradientMaskImage as a mask within that rectangle.

// In order to grab the part of the image that we want to render, we move the context origin to the

// height of the image that we want to capture, then we flip the context so that the image draws upside down.

CGContextTranslateCTM(mainViewContentContext, 0.0, height);

CGContextScaleCTM(mainViewContentContext, 1.0, -1.0);

// draw the image into the bitmap context

CGContextDrawImage(mainViewContentContext, fromImage.bounds, fromImage.image.CGImage);

When the image is drawn into the bitmap context it is masked with the mask image and clipped to the rectangle passed to CGContextClipToMask. The output image can now be obtained through CGBitmapContextCreateImage as shown below.
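The effect of the translate and scale calls on a single point can be sketched in plain C. This only models the arithmetic of translating by (0, height) and then scaling by (1, -1); the Point2D type and the function flip_vertically are hypothetical names.

```c
#include <assert.h>

typedef struct { double x, y; } Point2D;

/* Map a point drawn after "translate by (0, height), scale by (1, -1)":
   x is unchanged and y is mirrored inside the band [0, height]. */
static Point2D flip_vertically(Point2D p, double height) {
    assert(height >= 0.0);
    Point2D out = { p.x, height - p.y };
    return out;
}
```

A point at y = 0 lands at y = height and vice versa, which is what makes the image draw upside down inside the reflection context.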

// create CGImageRef of the main view bitmap content, and then release that bitmap context

CGImageRef reflectionImage = CGBitmapContextCreateImage(mainViewContentContext);

CGContextRelease(mainViewContentContext);

The output image can be added to an image view or drawn directly into a view.

I hope this series of posts on image reflection has been useful in describing the concepts of image inversion, gradients and masking. Please feel free to provide your comments and feedback.
